Computational Creativity

Exploring Text Generation with The Iliad - Training and Evaluating AI Models

This week, I trained a text generation model on The Iliad by Homer. I varied the number of training epochs and used the model to generate and predict phrases from user input. I also explored a musical note generator and evaluated its output.

Overview

Using a plain-text copy of The Iliad from Project Gutenberg, I trained the model in Google Colab. I ran several training iterations with different numbers of epochs to see how the epoch count affects the quality of the generated text.

Text Generation Model Training

Dataset Preparation

I began by downloading the plain-text file of The Iliad from Project Gutenberg, which served as the training dataset. Training used the code from https://github.com/ml5js/training-charRNN and ran on Google Colab. The repository implements a multi-layer recurrent neural network (LSTM/RNN) for character-level language modeling in Python with TensorFlow. In other words, the model learns to predict the next character from the characters before it. The code is adapted so that the trained model can be loaded with TensorFlow.js and ml5.js.
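The following minimal JavaScript sketch shows what "character-level" means in practice. It illustrates the general idea and is not the repository's own preprocessing, which is done in Python. Every distinct character is assigned an integer ID, and each training target is the input sequence shifted forward by one character. The function names and the sequence length of 5 are illustrative assumptions.

```javascript
// Illustrative sketch only: the training-charRNN scripts do their own
// preprocessing in Python. Names and sequence length are hypothetical.

// Build a sorted vocabulary of the distinct characters in the corpus.
function buildVocab(text) {
  const chars = Array.from(new Set(text)).sort();
  const charToId = new Map(chars.map((c, i) => [c, i]));
  return { chars, charToId };
}

// Encode text as an array of integer IDs, one per character.
function encode(text, charToId) {
  return Array.from(text, (c) => charToId.get(c));
}

// Split the IDs into (input, target) pairs of length seqLength.
// The target at each position is simply the next character.
function nextCharPairs(ids, seqLength) {
  const pairs = [];
  for (let i = 0; i + seqLength < ids.length; i += seqLength) {
    pairs.push({
      input: ids.slice(i, i + seqLength),
      target: ids.slice(i + 1, i + seqLength + 1),
    });
  }
  return pairs;
}

const sample = "sing, o goddess";
const { chars, charToId } = buildVocab(sample);
const ids = encode(sample, charToId);
console.log(chars.length, nextCharPairs(ids, 5).length);
```

On the full text of The Iliad, the vocabulary stays small (letters, punctuation, and whitespace). The model's job at every step is to choose among those few characters.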

Training Iterations and Challenges

First Attempt - 10 Epochs:

- Setup: I initially trained the model for 10 epochs.

- Outcome: The training ran smoothly without errors, and the model was able to generate coherent phrases based on user input.

Second Attempt - 100 Epochs:

- Setup: I increased the number of epochs to 100, expecting improved accuracy and more refined text generation.

- Challenges: Training took almost two hours, and I ran into GPU runtime errors. In particular, the T4 GPU I had selected was not being fully utilized, which slowed training significantly.

- Adjustment: To save time, I switched to training on the CPU instead of the GPU and reduced the dataset to 6,000 lines of The Iliad. Training was faster, and the quality of the model's output remained relatively consistent.
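The dataset reduction above can be sketched in plain JavaScript. This is a hypothetical helper, not the script actually used. It makes two assumptions: the file contains the usual Project Gutenberg `*** START OF` / `*** END OF` marker lines, and the kept lines are the first 6,000 lines of the book's body. The post does not say which lines were kept.

```javascript
// Hypothetical helper: trim a Project Gutenberg text to the body of the
// book and keep at most maxLines lines. Assumes the usual
// "*** START OF ..." / "*** END OF ..." marker lines, and keeps the
// first lines of the body (the post does not say which were used).
function prepareCorpus(raw, maxLines) {
  // Normalise Windows line endings so splitting is consistent.
  let lines = raw.replace(/\r\n/g, "\n").split("\n");

  // Drop the Gutenberg header and footer if both markers are present.
  const start = lines.findIndex((l) => l.startsWith("*** START OF"));
  const end = lines.findIndex((l) => l.startsWith("*** END OF"));
  if (start !== -1 && end > start) {
    lines = lines.slice(start + 1, end);
  }

  // Keep only the first maxLines lines of what remains.
  return lines.slice(0, maxLines).join("\n");
}

const raw = [
  "Header text",
  "*** START OF THE PROJECT GUTENBERG EBOOK THE ILIAD ***",
  "line one",
  "line two",
  "line three",
  "*** END OF THE PROJECT GUTENBERG EBOOK THE ILIAD ***",
  "Licence text",
].join("\n");

console.log(prepareCorpus(raw, 2)); // prints "line one" and "line two"
```

In Node.js, the raw text could be read with `fs.readFileSync("iliad.txt", "utf8")` and passed to `prepareCorpus(raw, 6000)`. The filename is hypothetical.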

Final Attempt - 200 Epochs:

- Setup: For the final iteration, I trained the model for 200 epochs on the reduced dataset.

- Outcome: Although training took much longer, the resulting model produced slightly more accurate and cohesive text predictions. The differences between the 100-epoch and 200-epoch models were subtle but noticeable, particularly in accuracy and fluency.

Model Deployment and Testing

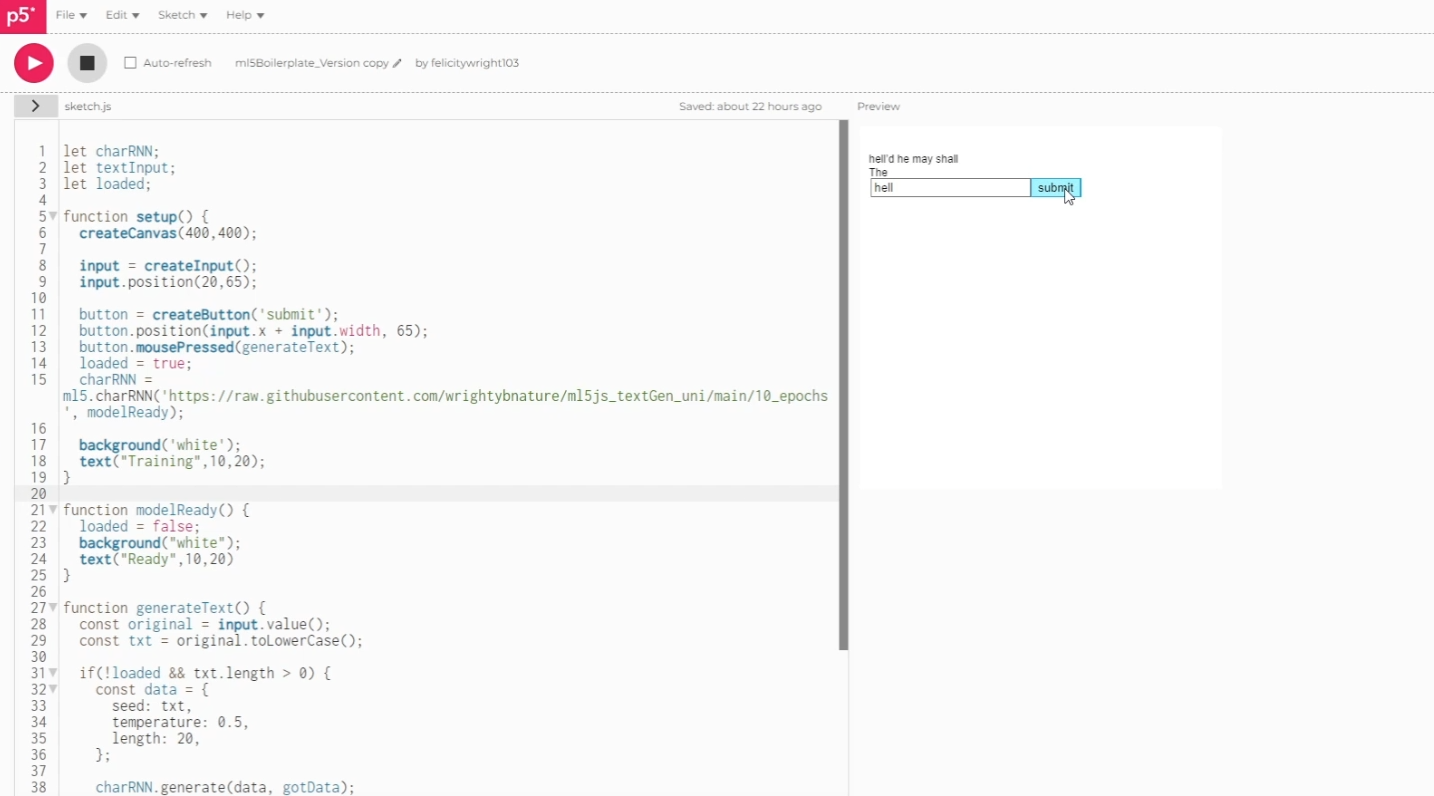

After training, I uploaded the model files to my GitHub repository. I then used the p5.js web editor with ml5.js to load the model, generate phrases from user input, and display them. To evaluate the model, I tested it with various input words and viewed the results in the web editor.

Code

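```javascript
let charRNN;
let input;
let button;
let modelLoaded = false; // set to true once the model files have loaded
let lastSeed = ''; // seed of the most recent request, shown with the result

function setup() {
  createCanvas(400, 400);

  // Text box for the seed phrase, with a submit button beside it
  input = createInput();
  input.position(20, 65);
  button = createButton('submit');
  button.position(input.x + input.width, 65);
  button.mousePressed(generateText);

  // Load the 200-epoch model from GitHub; modelReady runs when it finishes
  charRNN = ml5.charRNN('https://raw.githubusercontent.com/wrightybnature/ml5js_textGen_uni/main/200_epochs', modelReady);

  background('white');
  text('Loading model...', 10, 20);
}

function modelReady() {
  modelLoaded = true;
  background('white');
  text('Ready', 10, 20);
}

function generateText() {
  const seed = input.value().toLowerCase();
  // Ignore clicks before the model has loaded or when the box is empty
  if (modelLoaded && seed.length > 0) {
    lastSeed = seed;
    const options = {
      seed: seed,
      temperature: 0.5, // lower values give more predictable text
      length: 20, // number of characters to generate
    };
    charRNN.generate(options, gotData);
  }
}

function gotData(err, result) {
  if (err) {
    console.error(err);
    return;
  }
  background('white');
  text('Ready', 10, 20);
  // Seed followed by the generated continuation, wrapped in a box below the input
  text(lastSeed + result.sample, 10, 100, 380, 280);
}
```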
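The `temperature: 0.5` option controls how adventurous the generated text is. The sketch below illustrates the general technique of temperature sampling. It is not ml5's code; it assumes only that charRNN samples along these lines internally. Dividing the model's scores (logits) by a temperature below 1 sharpens the distribution toward the most likely character. A temperature above 1 flattens it. The logits here are hypothetical.

```javascript
// Illustration of temperature sampling (not ml5's implementation).

// Convert raw scores into probabilities, scaled by temperature.
function softmaxWithTemperature(logits, temperature) {
  const scaled = logits.map((z) => z / temperature);
  const maxZ = Math.max(...scaled); // subtract the max for numerical stability
  const exps = scaled.map((z) => Math.exp(z - maxZ));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Draw an index from a probability distribution; rand is in [0, 1).
function sampleIndex(probs, rand = Math.random()) {
  let cumulative = 0;
  for (let i = 0; i < probs.length; i++) {
    cumulative += probs[i];
    if (rand < cumulative) return i;
  }
  return probs.length - 1; // guard against floating-point rounding
}

const logits = [2.0, 1.0, 0.1]; // hypothetical scores for three characters
console.log(softmaxWithTemperature(logits, 0.5));
console.log(softmaxWithTemperature(logits, 1.5));
console.log(sampleIndex(softmaxWithTemperature(logits, 0.5)));
```

The most likely character's probability is noticeably higher at 0.5 than at 1.5. This is why a lower temperature tends to produce safer, more repetitive phrases.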

Reflection

This week's work highlighted how much training parameters, such as the number of epochs, affect the quality of AI-generated text. The experiments with The Iliad showed that increasing the number of training epochs can lead to more accurate and refined predictions. However, training for too many epochs can cause overfitting. The model then fits the training text too closely and performs poorly on unseen text, such as a user's input.
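A common way to catch overfitting is to hold out part of the text as a validation set and track its loss after each epoch. Once the validation loss stops falling and starts to rise, further epochs are likely fitting the training text too closely. Below is a minimal early-stopping sketch. It assumes per-epoch validation losses are available. The post does not report any, so the numbers are hypothetical, as are the function name and the `patience` parameter.

```javascript
// Hypothetical early-stopping helper. Epochs are counted from 0.
// Stops once the validation loss has not improved for `patience` epochs.
function earlyStoppingEpoch(valLosses, patience) {
  let bestEpoch = 0;
  let bestLoss = Infinity;
  for (let epoch = 0; epoch < valLosses.length; epoch++) {
    if (valLosses[epoch] < bestLoss) {
      bestLoss = valLosses[epoch];
      bestEpoch = epoch;
    } else if (epoch - bestEpoch >= patience) {
      // No improvement for `patience` epochs: stop and keep the best one.
      return { bestEpoch, bestLoss, stoppedAt: epoch };
    }
  }
  return { bestEpoch, bestLoss, stoppedAt: valLosses.length - 1 };
}

// Hypothetical curve: validation loss falls, then rises as the model overfits.
const hypotheticalValLosses = [2.1, 1.8, 1.6, 1.55, 1.57, 1.6, 1.66];
console.log(earlyStoppingEpoch(hypotheticalValLosses, 2));
```

With a patience of 2, this picks epoch 3 as the best and stops at epoch 5, before the rising losses of the later epochs.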

Video Explanation

Below is a short video where I walk through the process of training the text generation model, discuss the challenges encountered, and showcase the model’s performance in generating text from The Iliad.